Services and Infrastructures

The Infrastructure and Services Department is responsible for the data center facilities and for the storage and computing services that PIC hosts. IT professionals in this department also provide technical support to PIC's users and develop new solutions to adapt our services to their needs.

Computing

PIC is a throughput-oriented site: latency matters less than total throughput. Our priority is to complete as many jobs as possible, even if individual jobs take considerably longer to finish. So instead of obtaining our computing power from a large supercomputer with low-latency interconnects, we replicate many small commodity units that, working together, achieve our goal. At the same time, we renew our hardware frequently to offer the latest and fastest processors on the market.

As of October 2023, the computing hardware is based on dual-twin servers from Supermicro, Intel and Dell: 160 nodes providing 11,000 computing slots. Most of these servers are immersed in the CarnotJet liquid cooling system from Green Revolution Cooling.

Apart from the computing nodes, PIC offers all the resources required for grid computing: user interfaces, computing elements and publication elements.
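To make the throughput argument concrete, here is a minimal back-of-the-envelope sketch. It assumes identical, independent single-slot jobs that run back to back with no scheduling or I/O overhead. Only the 11,000-slot figure comes from the text; the job durations and the smaller "fast" alternative are hypothetical numbers chosen for illustration, as is the helper name `jobs_completed`.

```python
def jobs_completed(slots: int, job_hours: float, window_hours: float) -> int:
    """Number of jobs finished within a time window.

    Hypothetical simplification: identical, independent single-slot jobs
    run back to back on every slot, with no scheduling or I/O overhead.
    """
    jobs_per_slot = int(window_hours // job_hours)  # whole jobs per slot
    return slots * jobs_per_slot


# Throughput-oriented farm: 11000 commodity slots (figure from the text),
# each job taking a hypothetical 8 hours.
farm = jobs_completed(slots=11000, job_hours=8.0, window_hours=24.0)

# Latency-oriented alternative (hypothetical): 1000 faster slots, 2 h per job.
fast = jobs_completed(slots=1000, job_hours=2.0, window_hours=24.0)

print(farm, fast)  # 33000 12000
```

Even though each job on the farm takes four times longer in this example, the farm finishes almost three times as many jobs per day, which is the quantity a throughput-oriented site optimizes.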

Storage – Tape

The tape infrastructure at PIC is provided by an IBM TS4500 library with around 6000 tape slots, which are expected to cover PIC's tape needs in the coming years. Enstore, the mass storage system developed at Fermilab, manages almost 65 PB of tape storage and provides distributed access to millions of files.

The supported technologies are LTO-8 and LTO-9, and we plan to double our existing capacity during 2024 and 2025.
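As a rough illustration of what the slot count means in capacity terms, the sketch below computes an upper bound for a fully populated library. It assumes every one of the ~6000 slots holds a single cartridge of one generation at native (uncompressed) capacity: 12 TB for LTO-8 and 18 TB for LTO-9. Real usable capacity will differ because of reserved slots, mixed media and compression; the helper name `library_capacity_pb` is hypothetical.

```python
# Native (uncompressed) capacity per cartridge, in TB, per LTO generation.
LTO_NATIVE_TB = {"LTO-8": 12, "LTO-9": 18}


def library_capacity_pb(slots: int, generation: str) -> float:
    """Upper-bound native capacity in PB (1 PB = 1000 TB), assuming every
    slot holds one cartridge of the given generation. Ignores compression,
    reserved slots and cleaning cartridges."""
    return slots * LTO_NATIVE_TB[generation] / 1000


for gen in ("LTO-8", "LTO-9"):
    # prints: LTO-8 72.0, then LTO-9 108.0
    print(gen, library_capacity_pb(6000, gen))
```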

Storage – Disk

PIC disk storage is based on dCache, an open-source system that aggregates all our disk servers and presents them as a single namespace (file system). It supports a large set of standard protocols (WebDAV, FTP, SRM, NFSv4.1, XRootD, …) for accessing the data repository and its namespace.

PIC currently manages ~20 PB of disk data and provides disk space to the CMS, ATLAS and LHCb experiments. PIC also operates the disk storage for the IFAE ATLAS Tier-2 and Tier-3, which accounts for 25% of the Federated Spanish ATLAS Tier-2.

Apart from the LHC projects, PIC acts as the Tier-0 of the MAGIC and PAU experiments, is one of the ten science data centers of the Euclid consortium, and is ramping up data acquisition for the CTA telescope prototype.

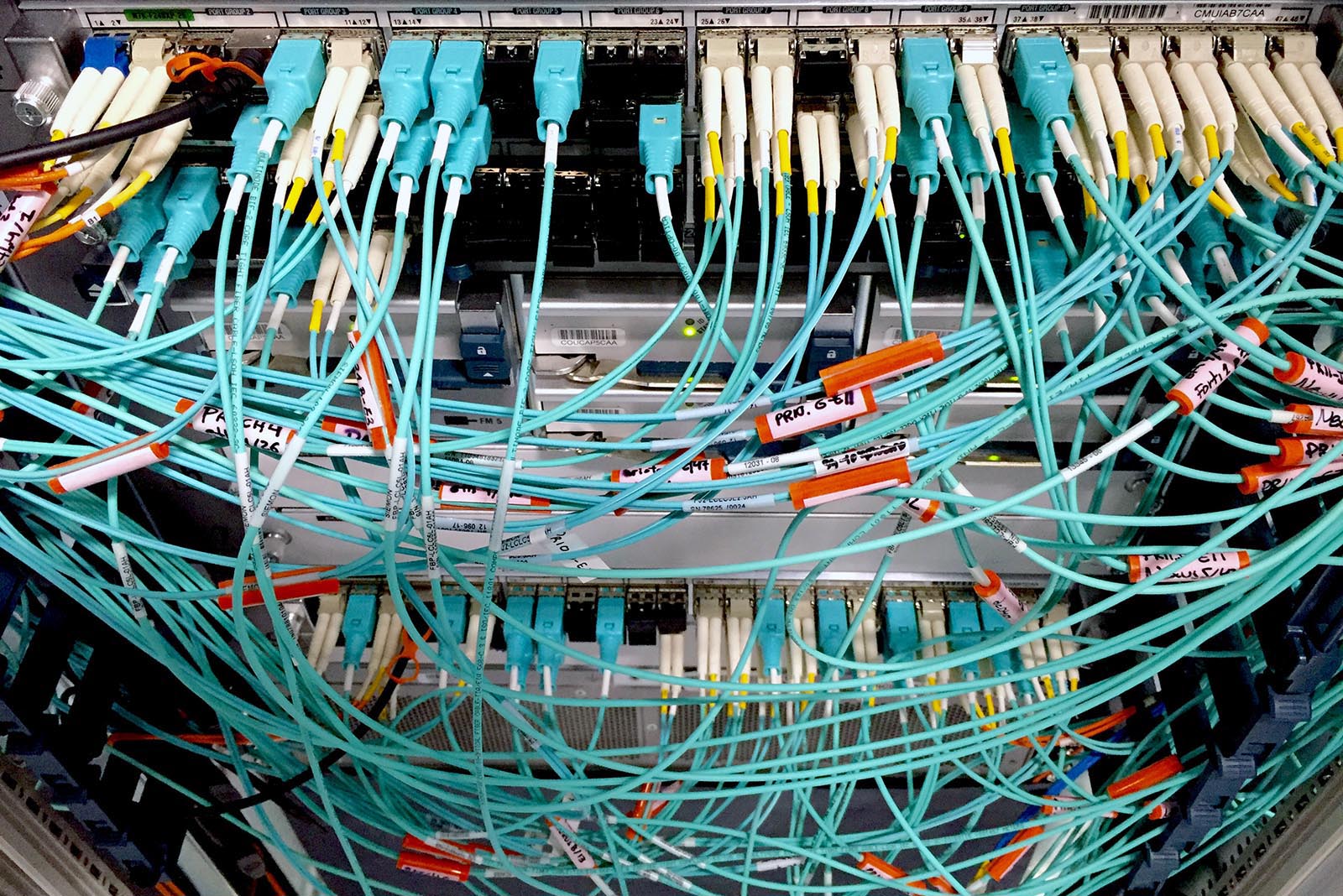

Network

PIC is connected to other centers through a 200 Gbps physical link shared by the following services:

- LHCOPN (LHC Optical Private Network): connection with CERN and the other LHC Tier-1 centers over a private network

- LHCONE (LHC Open Network Environment): connection with the LHC Tier-2 centers over a private network

- Dedicated 10 Gbps link to El Roque de los Muchachos (IAC)

- Internet connection for users and for other experiments that transfer data to PIC

All these connections are provided through the Anella Científica, the organization that also connects the center to RedIRIS.
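To give a sense of scale for these link speeds, here is a minimal transfer-time calculation. It assumes decimal units (1 TB = 10^12 bytes) and a constant fraction of line rate. Because the 200 Gbps link is shared between services, using the full rate is an idealization; the 70% efficiency in the second example and the helper name `transfer_hours` are hypothetical.

```python
def transfer_hours(size_tb: float, link_gbps: float, efficiency: float = 1.0) -> float:
    """Hours to move size_tb terabytes (1 TB = 10**12 bytes) over a link of
    link_gbps gigabits per second, running at the given fraction of line rate."""
    bits = size_tb * 1e12 * 8
    seconds = bits / (link_gbps * 1e9 * efficiency)
    return seconds / 3600


# 1 PB over the full 200 Gbps link (ideal case: link not shared, 100% efficiency).
print(round(transfer_hours(1000, 200), 1))  # 11.1

# 1 TB over the dedicated 10 Gbps link at a hypothetical 70% efficiency.
print(round(transfer_hours(1, 10, efficiency=0.7), 2))  # 0.32
```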

Particle Physics

The group coordinates and supports LHC activities (ATLAS, CMS, LHCb), neutrino physics experiments, VIP (Voxel Imaging PET) and many other smaller projects.

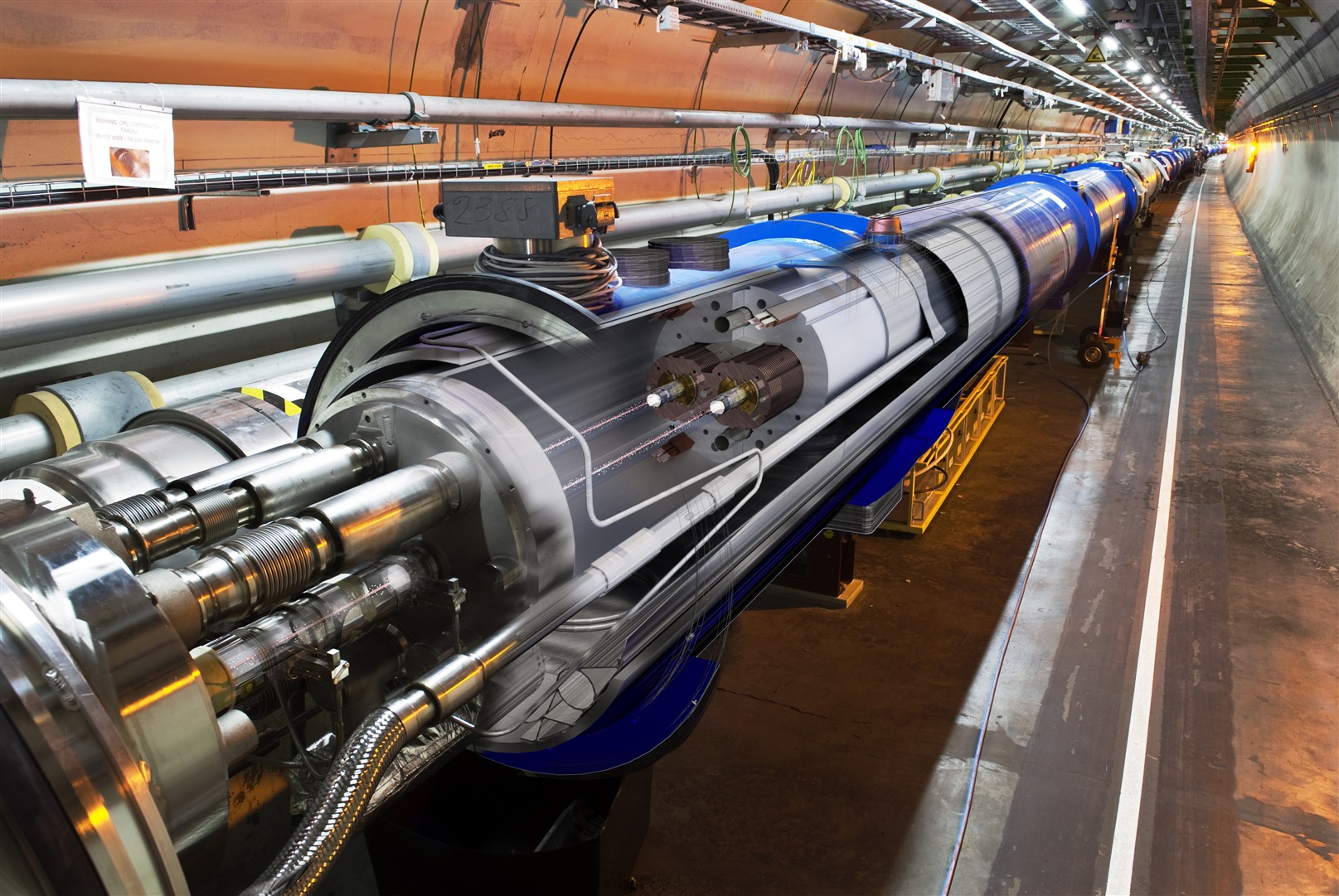

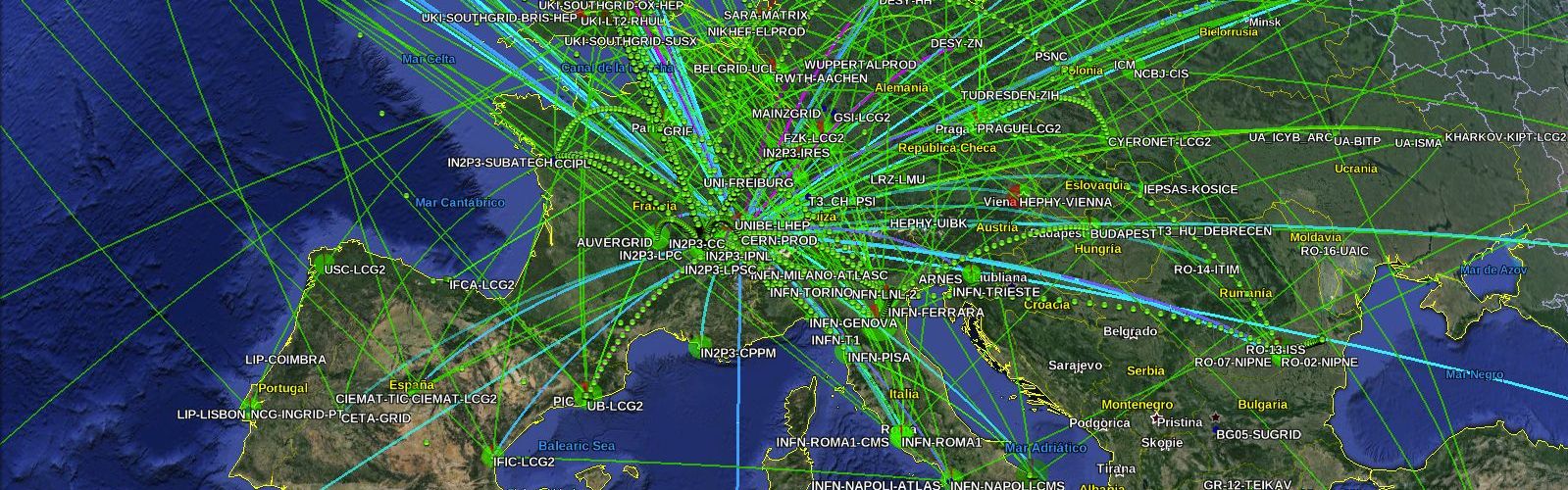

The enormous amounts of data produced by the LHC experiments require special, worldwide distributed computing resources for data reconstruction, simulation and data analysis. PIC operates a WLCG Tier-1 center that supports the ATLAS, CMS and LHCb experiments, as well as a fraction of the Spanish ATLAS Tier-2 center. PIC also provides an ATLAS Tier-3 facility that helps local physicists with their data analysis. Around 80% of the resources deployed at PIC are used by LHC activities. The PIC team holds several positions of responsibility within WLCG and the LHC experiments, contributing actively to their core computing areas. The group also supports the IFAE research group working on the T2K neutrino experiment, as well as the use of Grid resources for design studies of PET devices (VIP project). When necessary, technical support is provided to integrate experiment workflows into the Grid.

Astrophysics and Cosmology

The Astrophysics and Cosmology group works in collaboration with several groups in the scientific community dedicated to astronomical research. Major experiments in astrophysics are CTA and MAGIC, which investigate high-energy gamma-ray sources through the Cherenkov radiation emitted by secondary particles. DES, PAU and Euclid are cosmological surveys searching for evidence of dark energy and dark matter through optical and near-infrared imaging. MICE supports cosmological surveys by simulating the dynamics of dark matter structures at very large scales and deriving galaxy catalogs from the resulting dark matter maps.

Data management for these collaborations covers the whole pipeline, from data acquisition through analysis to data publication. The group's scientists and engineers are involved in various technical and scientific aspects of exploiting massive data sets from simulations and from ground-based and satellite telescopes.

Applied AI

Artificial intelligence (AI) has boomed over the past decade, thanks to the increased availability of computational power and data. Research groups in virtually every scientific field, from astronomy to biology to materials science, have started to combine machine learning (ML) with their state-of-the-art experimental techniques, reaching new levels of precision or revealing new capabilities.

The Applied AI group at PIC works with researchers from several disciplines to develop data analysis methods based on ML techniques. With a strong focus on interdisciplinary cross-fertilization, the group develops ML models for analyzing galaxy images and advanced optical and electron microscopy data.

Euclid – A space mission to map the Dark Universe

PAU – Physics of the accelerating Universe

DES – Dark Energy Survey

T2K – Tokai to Kamioka neutrino experiment

VIP – Voxel Imaging PET

UAB Research Park
